🚀 OneFormer

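OneFormer is a model (tiny-sized version, Swin backbone) trained on the ADE20k dataset. It was introduced by Jain et al. in the paper OneFormer: One Transformer to Rule Universal Image Segmentation and first released in the official OneFormer repository. A single OneFormer model handles semantic, instance, and panoptic image segmentation.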

🚀 Quick Start

The OneFormer model can be used directly for semantic, instance, and panoptic segmentation. See the usage examples below to get started.

✨ Key Features

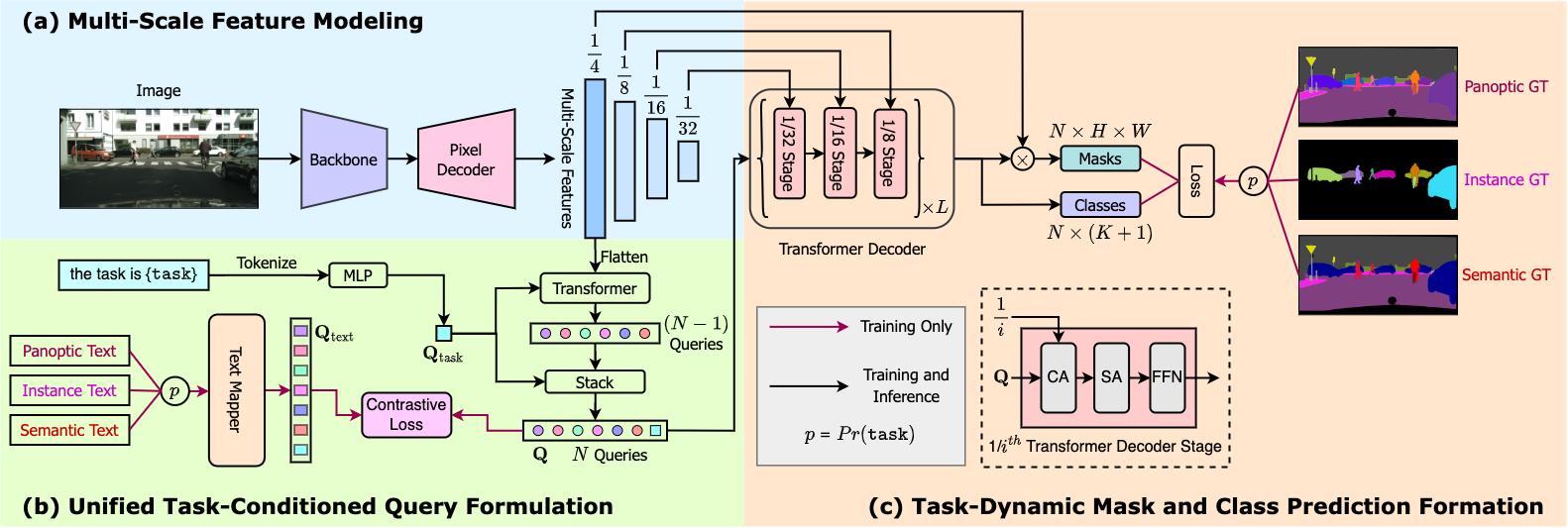

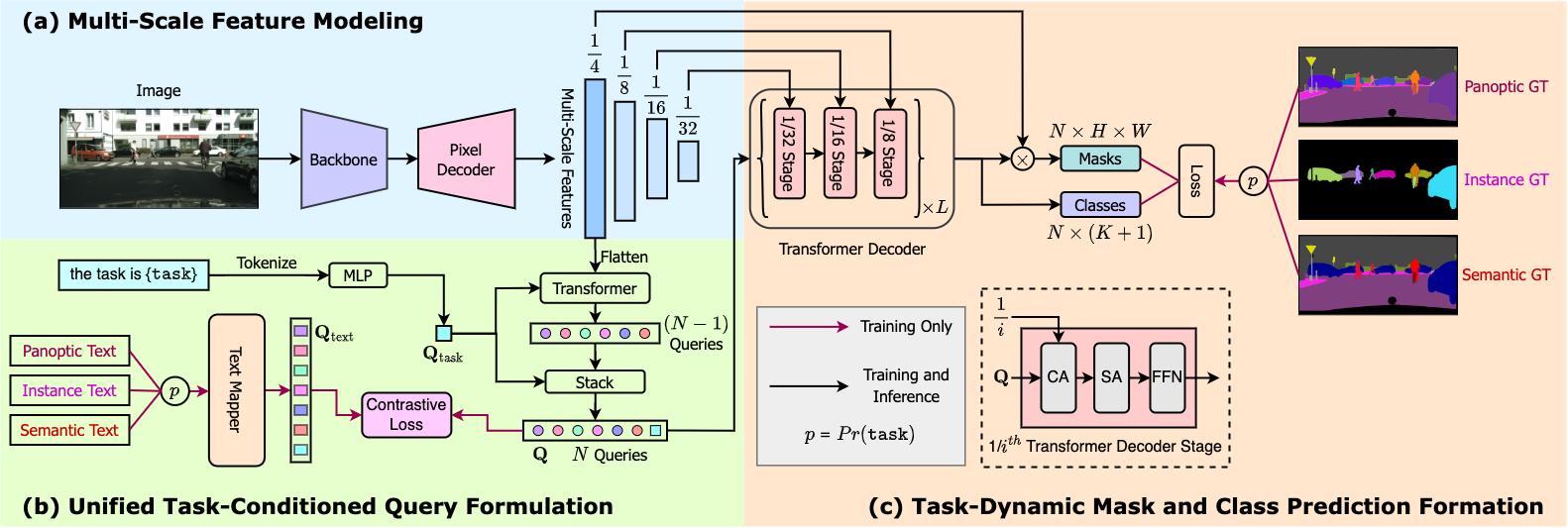

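- OneFormer is the first multi-task universal image segmentation framework.

- It needs to be trained only once, with a single universal architecture, a single model, and a single dataset, to outperform existing specialized models across semantic, instance, and panoptic segmentation.

- It uses a task token to condition the model on the task in focus, making the architecture task-guided during training and task-dynamic during inference, all with a single model.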
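To make the "single model, task-dynamic inference" point concrete, here is a minimal sketch that runs one processor/model pair over all three tasks, changing only the task passed through `task_inputs`. The helper name `segment_all_tasks` is hypothetical; it assumes `processor` and `model` are the `OneFormerProcessor` and `OneFormerForUniversalSegmentation` objects loaded as in the usage example, and that the processor exposes a `post_process_{task}_segmentation` method for each task name.

```python
from typing import Any, Dict, Tuple

# Task names passed to OneFormerProcessor via `task_inputs`.
TASKS: Tuple[str, ...] = ("semantic", "instance", "panoptic")


def segment_all_tasks(processor: Any, model: Any, image: Any) -> Dict[str, Any]:
    """Hypothetical helper: run one OneFormer model on one image for every task.

    Only the task token (set via `task_inputs`) changes between runs; the
    model weights are shared across all three tasks.
    """
    # PIL reports (width, height); the post-processors expect (height, width).
    target_size = image.size[::-1]
    results: Dict[str, Any] = {}
    for task in TASKS:
        inputs = processor(images=image, task_inputs=[task], return_tensors="pt")
        outputs = model(**inputs)
        # Resolves to post_process_semantic_segmentation, ..._instance_..., ..._panoptic_...
        post_process = getattr(processor, f"post_process_{task}_segmentation")
        results[task] = post_process(outputs, target_sizes=[target_size])[0]
    return results
```

With the objects from the usage example, `segment_all_tasks(processor, model, image)["panoptic"]["segmentation"]` would give the panoptic map. Wrapping the call in `torch.no_grad()` avoids tracking gradients during inference.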

💻 Usage Examples

Basic Usage

```python
import requests
import torch
from PIL import Image
from transformers import OneFormerProcessor, OneFormerForUniversalSegmentation

# Use the /resolve/ URL to download the raw image; /blob/ returns an HTML page
url = "https://huggingface.co/datasets/shi-labs/oneformer_demo/resolve/main/ade20k.jpeg"
image = Image.open(requests.get(url, stream=True).raw)

# Load a single processor and model that serve all three tasks
processor = OneFormerProcessor.from_pretrained("shi-labs/oneformer_ade20k_swin_tiny")
model = OneFormerForUniversalSegmentation.from_pretrained("shi-labs/oneformer_ade20k_swin_tiny")

# PIL gives (width, height); post-processing expects (height, width)
target_sizes = [image.size[::-1]]

# Semantic segmentation
semantic_inputs = processor(images=image, task_inputs=["semantic"], return_tensors="pt")
with torch.no_grad():
    semantic_outputs = model(**semantic_inputs)
predicted_semantic_map = processor.post_process_semantic_segmentation(semantic_outputs, target_sizes=target_sizes)[0]

# Instance segmentation
instance_inputs = processor(images=image, task_inputs=["instance"], return_tensors="pt")
with torch.no_grad():
    instance_outputs = model(**instance_inputs)
predicted_instance_map = processor.post_process_instance_segmentation(instance_outputs, target_sizes=target_sizes)[0]["segmentation"]

# Panoptic segmentation
panoptic_inputs = processor(images=image, task_inputs=["panoptic"], return_tensors="pt")
with torch.no_grad():
    panoptic_outputs = model(**panoptic_inputs)
predicted_panoptic_map = processor.post_process_panoptic_segmentation(panoptic_outputs, target_sizes=target_sizes)[0]["segmentation"]
```
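Besides the `segmentation` map, the panoptic (and instance) post-processing result carries a `segments_info` list. A minimal sketch for turning it into per-class segment counts, assuming each entry has an integer `label_id` and that `id2label` is the model's `model.config.id2label` mapping; the helper `summarize_segments` is hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def summarize_segments(segments_info: Iterable[Mapping], id2label: Mapping[int, str]) -> Dict[str, int]:
    """Hypothetical helper: count predicted segments per class name.

    `segments_info` is assumed to be the list stored under the "segments_info"
    key of a post-processing result; each entry is assumed to have "label_id".
    Classes are returned from most to least frequent.
    """
    counts = Counter(id2label[segment["label_id"]] for segment in segments_info)
    return dict(counts.most_common())
```

For example, `summarize_segments(panoptic_result["segments_info"], model.config.id2label)`, where `panoptic_result` is the first element returned by `processor.post_process_panoptic_segmentation(panoptic_outputs, target_sizes=[image.size[::-1]])`.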

More Examples

For more examples, refer to the documentation.

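📚 Documentation

Model Description

OneFormer is the first multi-task universal image segmentation framework. It needs to be trained only once, with a single universal architecture, a single model, and a single dataset, to outperform existing specialized models across semantic, instance, and panoptic segmentation. OneFormer uses a task token to condition the model on the task in focus, making the architecture task-guided during training and task-dynamic during inference, all with a single model.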
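The task-guided training idea can be illustrated with a toy sketch: for each training image, a task is sampled uniformly, turned into a text prompt for the task token, and the task-specific targets are derived from a single panoptic annotation. The `Segment` structure, the helper names, the template `"the task is {task}"`, and the derivation rules (merge masks per class for semantic, keep only thing instances for instance, keep every region for panoptic) are assumptions based on the paper's description, not the reference implementation.

```python
import random
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

TASKS: Tuple[str, ...] = ("semantic", "instance", "panoptic")
Pixel = Tuple[int, int]
Target = Tuple[str, FrozenSet[Pixel]]


@dataclass(frozen=True)
class Segment:
    """One region of a toy panoptic annotation (hypothetical structure)."""

    pixels: FrozenSet[Pixel]  # (row, col) coordinates covered by the region
    label: str                # class name
    is_thing: bool            # True for countable objects, False for stuff


def task_text(task: str) -> str:
    # Assumed template for the text that conditions the task token.
    return f"the task is {task}"


def derive_targets(segments: List[Segment], task: str) -> List[Target]:
    """Derive task-specific (label, mask) targets from one panoptic annotation."""
    if task == "panoptic":
        # Every stuff region and every thing instance keeps its own mask.
        return [(s.label, s.pixels) for s in segments]
    if task == "instance":
        # Only thing instances are kept; stuff regions are dropped.
        return [(s.label, s.pixels) for s in segments if s.is_thing]
    if task == "semantic":
        # All regions of the same class are merged into one mask per class.
        merged: Dict[str, FrozenSet[Pixel]] = {}
        for s in segments:
            merged[s.label] = merged.get(s.label, frozenset()) | s.pixels
        return sorted(merged.items())
    raise ValueError(f"unknown task: {task!r}")


def sample_training_example(segments: List[Segment], rng: random.Random) -> Tuple[str, List[Target]]:
    """Uniformly sample a task, then build its text prompt and targets."""
    task = rng.choice(TASKS)
    return task_text(task), derive_targets(segments, task)
```

In this sketch one annotation supervises all three tasks, which is what allows a single model to be trained once on a single dataset.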

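Intended Uses and Limitations

You can use this checkpoint for semantic, instance, and panoptic segmentation. To find versions fine-tuned on other datasets, see the model hub.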

Citation

```bibtex
@article{jain2022oneformer,
  title={{OneFormer: One Transformer to Rule Universal Image Segmentation}},
  author={Jitesh Jain and Jiachen Li and MangTik Chiu and Ali Hassani and Nikita Orlov and Humphrey Shi},
  journal={arXiv},
  year={2022}
}
```

📄 License

This project is licensed under the MIT License.

📦 Model Information

| Property | Details |
|---|---|
| Model type | OneFormer (tiny-sized version, Swin backbone) trained on ADE20k |
| Training data | scene_parse_150 |
