mmE5-mllama-11b-instruct

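Model Overview

This model targets multimodal (image + text) and multilingual embedding tasks. It maps images and text into a unified embedding space, which supports cross-modal retrieval and similarity computation.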
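Because images and texts share one embedding space, comparing them comes down to the cosine similarity between two vectors. The plain-Python sketch below illustrates that computation. The 3-dimensional vectors are made-up stand-ins for real model outputs, which are much higher-dimensional. The Transformers example further down does the equivalent in PyTorch: it L2-normalizes the pooled vectors and then takes a matrix product.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine similarity = dot product of the two L2-normalized vectors."""
    return dot(l2_normalize(a), l2_normalize(b))


# Hypothetical toy embeddings, not real mmE5 outputs.
image_vec = [0.9, 0.1, 0.3]
caption_vec = [0.8, 0.2, 0.4]
unrelated_vec = [-0.1, 0.9, -0.2]

print(round(cosine_similarity(image_vec, caption_vec), 4))
print(round(cosine_similarity(image_vec, unrelated_vec), 4))
```

A matching image/caption pair scores close to 1, and an unrelated pair scores near or below 0.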

模型特点

多模态嵌入能力

能够同时处理图像和文本输入,将它们映射到统一的嵌入空间

多语言支持

支持8种语言的文本处理,包括英语、中文、阿拉伯语等

高质量合成数据训练

使用专门设计的合成数据进行训练,提高模型性能

最先进性能

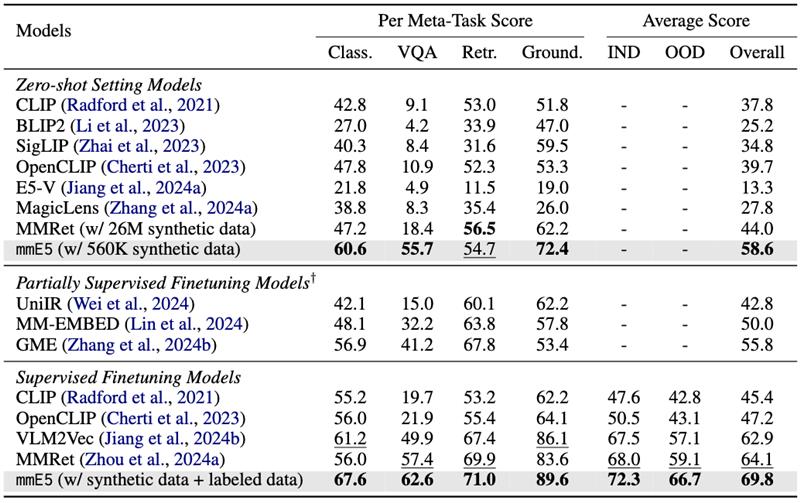

在MMEB基准测试中达到最先进水平

Model Capabilities

Image-text similarity computation

Cross-modal retrieval

Multilingual text embedding

Zero-shot image classification
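Zero-shot image classification reuses the same similarity computation. You embed one text prompt per candidate label, then pick the label whose embedding is closest to the image. The sketch below assumes the embeddings are already computed; the vectors are hypothetical toy values. The softmax temperature of 0.05 is an illustrative choice, not a value taken from mmE5.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def zero_shot_classify(image_emb, label_embs, temperature=0.05):
    """Return (best_label, probabilities) for one image embedding.

    label_embs maps each label to the embedding of a text prompt for that
    label. Scores are cosine similarities; a temperature-scaled softmax
    turns them into a distribution over labels.
    """
    img = l2_normalize(image_emb)
    scores = {
        label: sum(a * b for a, b in zip(img, l2_normalize(emb)))
        for label, emb in label_embs.items()
    }
    # Subtract the max score before exponentiating, for numerical stability.
    top = max(scores.values())
    exps = {label: math.exp((s - top) / temperature) for label, s in scores.items()}
    total = sum(exps.values())
    probs = {label: e / total for label, e in exps.items()}
    best = max(probs, key=probs.get)
    return best, probs


# Hypothetical embeddings; in practice both sides come from the model.
image_emb = [0.9, 0.1, 0.3]
label_embs = {
    "cat": [0.8, 0.2, 0.4],
    "car": [-0.1, 0.9, -0.2],
    "tree": [0.1, -0.3, 0.9],
}
best, probs = zero_shot_classify(image_emb, label_embs)
print(best, {k: round(v, 3) for k, v in probs.items()})
```

Only the argmax matters for the predicted label. The softmax is there in case calibrated-looking scores are useful, and its output depends heavily on the temperature.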

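Use Cases

Cross-modal retrieval

Image search

Retrieve relevant images with a text query.

In the Transformers example, the text query 'A cat and a dog' scores 0.4414 against the example image.

Text search

Retrieve relevant text descriptions with an image query.

In the Transformers example, the image query scores 0.4219 against the text 'A cat and a dog'.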
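Both search directions come down to ranking a set of precomputed embeddings by their similarity to one query embedding. The sketch below shows a top-k ranking in plain Python. The file names and vectors are hypothetical, and a real deployment over a large corpus would typically use a vector index instead of a linear scan.

```python
import heapq
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def top_k(query_emb, corpus, k=2):
    """Return the k corpus items most similar to the query, best first.

    corpus is a list of (item_id, embedding) pairs. The same function covers
    text -> image search (corpus holds image embeddings) and image -> text
    search (corpus holds caption embeddings).
    """
    q = l2_normalize(query_emb)
    scored = [
        (item_id, sum(a * b for a, b in zip(q, l2_normalize(emb))))
        for item_id, emb in corpus
    ]
    # nlargest avoids fully sorting the corpus when only k results are needed.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical precomputed image embeddings and a text-query embedding.
corpus = [
    ("cat_dog.jpg", [0.8, 0.2, 0.4]),
    ("car.jpg", [-0.1, 0.9, -0.2]),
    ("tree.jpg", [0.1, -0.3, 0.9]),
]
query = [0.9, 0.1, 0.3]
for item_id, score in top_k(query, corpus, k=2):
    print(item_id, round(score, 4))
```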

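Multilingual applications

Multilingual image tagging

Match images against descriptions or labels written in multiple languages.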

🚀 mmE5-mllama-11b-instruct

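The mmE5-mllama-11b-instruct model improves multimodal multilingual embeddings with high-quality synthetic data and performs strongly on the Massive Multimodal Embedding Benchmark (MMEB). It is trained from Llama-3.2-11B-Vision and is intended for multimodal, multilingual embedding tasks.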

🚀 Quick Start

Model Information

- Paper: mmE5: Improving Multimodal Multilingual Embeddings via High-quality Synthetic Data, by Haonan Chen, Liang Wang, Nan Yang, et al., arXiv 2025.

- Base model: trained from Llama-3.2-11B-Vision.

- GitHub repository: https://github.com/haon-chen/mmE5

Training/Evaluation Data

- Training data:
  - https://huggingface.co/datasets/intfloat/mmE5-MMEB-hardneg
  - https://huggingface.co/datasets/intfloat/mmE5-synthetic

- Evaluation data:
  - https://huggingface.co/datasets/TIGER-Lab/MMEB-eval
  - https://huggingface.co/datasets/Haon-Chen/XTD-10

Experimental Results

This model achieves state-of-the-art (SOTA) performance on the MMEB benchmark.
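Retrieval-style embedding benchmarks are often scored with precision@1, which asks whether the top-ranked candidate for each query is the correct one. The sketch below computes that metric from a query-by-candidate similarity matrix. It assumes the gold candidate for query i is column i. It does not reproduce MMEB's official evaluation code, candidate sets, or per-task aggregation, and the scores are hypothetical.

```python
def precision_at_1(sim_matrix):
    """Fraction of queries whose highest-scoring candidate is the gold one.

    Assumes sim_matrix[i][j] is the similarity between query i and
    candidate j, and that the gold candidate for query i is candidate i.
    """
    hits = 0
    for i, row in enumerate(sim_matrix):
        best_j = max(range(len(row)), key=row.__getitem__)
        hits += int(best_j == i)
    return hits / len(sim_matrix)


# Hypothetical scores for 3 queries x 3 candidates.
scores = [
    [0.42, 0.31, 0.10],  # query 0: gold candidate ranked first (hit)
    [0.20, 0.15, 0.33],  # query 1: a wrong candidate ranked first (miss)
    [0.05, 0.12, 0.40],  # query 2: gold candidate ranked first (hit)
]
print(precision_at_1(scores))
```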

💻 Usage Examples

Basic Usage

Using Transformers

The following example is adapted from VLM2Vec.

```python
import torch
import requests
from PIL import Image
from transformers import MllamaForConditionalGeneration, AutoProcessor

# Pooling and Normalization
def last_pooling(last_hidden_state, attention_mask, normalize=True):
    # Position of the last non-padding token in each sequence (assumes right padding)
    sequence_lengths = attention_mask.sum(dim=1) - 1
    batch_size = last_hidden_state.shape[0]
    reps = last_hidden_state[torch.arange(batch_size, device=last_hidden_state.device), sequence_lengths]
    if normalize:
        # L2-normalize so that a dot product equals cosine similarity
        reps = torch.nn.functional.normalize(reps, p=2, dim=-1)
    return reps

def compute_similarity(q_reps, p_reps):
    # (num_queries, dim) x (dim, num_candidates) -> (num_queries, num_candidates)
    return torch.matmul(q_reps, p_reps.transpose(0, 1))

model_name = "intfloat/mmE5-mllama-11b-instruct"

# Load Processor and Model
processor = AutoProcessor.from_pretrained(model_name)
model = MllamaForConditionalGeneration.from_pretrained(
    model_name, torch_dtype=torch.bfloat16
).to("cuda")
model.eval()
# Inference only: disable autograd so the forward passes do not keep gradient buffers
torch.set_grad_enabled(False)

# Image + Text -> Text
image = Image.open(requests.get('https://github.com/haon-chen/mmE5/blob/main/figures/example.jpg?raw=true', stream=True).raw)
inputs = processor(text='<|image|><|begin_of_text|>Represent the given image with the following question: What is in the image\n', images=[image], return_tensors="pt").to("cuda")
qry_output = last_pooling(model(**inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], inputs['attention_mask'])

string = 'A cat and a dog'
text_inputs = processor(text=string, return_tensors="pt").to("cuda")
tgt_output = last_pooling(model(**text_inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], text_inputs['attention_mask'])
print(string, '=', compute_similarity(qry_output, tgt_output))
# A cat and a dog = tensor([[0.4219]], device='cuda:0', dtype=torch.bfloat16)

string = 'A cat and a tiger'
text_inputs = processor(text=string, return_tensors="pt").to("cuda")
tgt_output = last_pooling(model(**text_inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], text_inputs['attention_mask'])
print(string, '=', compute_similarity(qry_output, tgt_output))
# A cat and a tiger = tensor([[0.3184]], device='cuda:0', dtype=torch.bfloat16)

# Text -> Image
inputs = processor(text='Find me an everyday image that matches the given caption: A cat and a dog.\n', return_tensors="pt").to("cuda")
qry_output = last_pooling(model(**inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], inputs['attention_mask'])

string = '<|image|><|begin_of_text|>Represent the given image.\n'
tgt_inputs = processor(text=string, images=[image], return_tensors="pt").to("cuda")
tgt_output = last_pooling(model(**tgt_inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], tgt_inputs['attention_mask'])
print(string, '=', compute_similarity(qry_output, tgt_output))
# <|image|><|begin_of_text|>Represent the given image. = tensor([[0.4414]], device='cuda:0', dtype=torch.bfloat16)

inputs = processor(text='Find me an everyday image that matches the given caption: A cat and a tiger.\n', return_tensors="pt").to("cuda")
qry_output = last_pooling(model(**inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], inputs['attention_mask'])

string = '<|image|><|begin_of_text|>Represent the given image.\n'
tgt_inputs = processor(text=string, images=[image], return_tensors="pt").to("cuda")
tgt_output = last_pooling(model(**tgt_inputs, return_dict=True, output_hidden_states=True).hidden_states[-1], tgt_inputs['attention_mask'])
print(string, '=', compute_similarity(qry_output, tgt_output))
# <|image|><|begin_of_text|>Represent the given image. = tensor([[0.3730]], device='cuda:0', dtype=torch.bfloat16)
```
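The calls above encode one input at a time, so no padding is involved. When inputs are batched, `attention_mask.sum(dim=1) - 1` in `last_pooling` points at the last real token only if sequences are padded on the right. With left padding, the last real token is always at the final position. Check which side the processor pads on, for example via the tokenizer's `padding_side` attribute, rather than assuming it. The plain-Python sketch below uses hypothetical helper names (`last_token_index`, `last_pool`) and toy data to show both cases.

```python
def last_token_index(attention_mask, padding_side="right"):
    """Index of the last non-padding token in each row of an attention mask.

    attention_mask is a list of 0/1 lists (1 = real token).
    - right padding: real tokens first, then zeros, so the index is
      (number of ones) - 1, matching attention_mask.sum(dim=1) - 1
    - left padding: zeros first, then real tokens, so the index is the
      final position
    """
    indices = []
    for row in attention_mask:
        if padding_side == "right":
            indices.append(sum(row) - 1)
        elif padding_side == "left":
            indices.append(len(row) - 1)
        else:
            raise ValueError(f"unknown padding_side: {padding_side!r}")
    return indices


def last_pool(hidden_states, attention_mask, padding_side="right"):
    """Pick the hidden vector of the last real token for every sequence."""
    idx = last_token_index(attention_mask, padding_side)
    return [seq[i] for seq, i in zip(hidden_states, idx)]


# Batch of 2 sequences, length 4, hidden size 2 (toy numbers).
hidden = [
    [[1, 1], [2, 2], [3, 3], [0, 0]],  # right-padded: 3 real tokens
    [[4, 4], [5, 5], [6, 6], [7, 7]],  # no padding
]
mask_right = [[1, 1, 1, 0], [1, 1, 1, 1]]
print(last_pool(hidden, mask_right))
```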

Using Sentence Transformers

You can also use the Sentence Transformers library, which wraps most of the pre- and post-processing steps.
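Without that wrapper, prompts are built by hand, as in the Transformers example above. Inputs that carry an image start with `<|image|><|begin_of_text|>`, and instruction prompts end with a newline. Plain candidate texts such as `'A cat and a dog'` are passed unchanged. The hypothetical helper `format_input` below only factors out that convention. It is not part of the mmE5 or Sentence Transformers API, and it does not describe how the Sentence Transformers wrapper formats inputs internally.

```python
IMAGE_PREFIX = "<|image|><|begin_of_text|>"


def format_input(instruction, with_image=False):
    """Build an instruction prompt in the style of the Transformers example.

    Ensures exactly one trailing newline, and prepends the image and
    begin-of-text tokens when the input includes an image.
    """
    prompt = instruction.rstrip("\n") + "\n"
    return IMAGE_PREFIX + prompt if with_image else prompt


query = format_input("Find me an everyday image that matches the given caption: A cat and a dog.")
target = format_input("Represent the given image.", with_image=True)
print(repr(query))
print(repr(target))
```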

```python
from sentence_transformers import SentenceTransformer
import requests

# Load the model
model = SentenceTransformer("intfloat/mmE5-mllama-11b-instruct", trust_remote_code=True)

# Download an example image of a cat and a dog
dog_cat_image_bytes = requests.get('https://github.com/haon-chen/mmE5/blob/main/figures/example.jpg?raw=true', stream=True).raw.read()
with open("cat_dog_example.jpg", "wb") as f:
    f.write(dog_cat_image_bytes)

# Image + Text -> Text
image_embeddings = model.encode([{
    "image": "cat_dog_example.jpg",
    "text": "Represent the given image with the following question: What is in the image",
}])
text_embeddings = model.encode([
    {"text": "A cat and a dog"},
    {"text": "A cat and a tiger"},
])
similarity = model.similarity(image_embeddings, text_embeddings)
print(similarity)
# tensor([[0.3967, 0.3090]])
# ✅ The first text is most similar to the image

# Text -> Image
image_embeddings = model.encode([
    {"image": dog_cat_image_bytes, "text": "Represent the given image."},
])
text_embeddings = model.encode([
    {"text": "Find me an everyday image that matches the given caption: A cat and a dog."},
    {"text": "Find me an everyday image that matches the given caption: A cat and a tiger."},
])
similarity = model.similarity(image_embeddings, text_embeddings)
print(similarity)
# tensor([[0.4250, 0.3896]])
# ✅ The first text is most similar to the image
```
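The ✅ comments read the answer off the similarity matrix by eye. In code, that is an argmax over each row. On the torch tensor returned by `model.similarity`, `similarity.argmax(dim=1)` gives the same indices. The sketch below does it in plain Python on the scores printed above, so it runs without loading the model.

```python
def best_match_per_row(similarity):
    """For each row (image), return the column index (text) with the highest score."""
    return [max(range(len(row)), key=row.__getitem__) for row in similarity]


captions = ["A cat and a dog", "A cat and a tiger"]
# Scores copied from the printed outputs above (rows: images, columns: texts).
image_to_text = [[0.3967, 0.3090]]
text_to_image = [[0.4250, 0.3896]]

for name, sim in [("Image + Text -> Text", image_to_text), ("Text -> Image", text_to_image)]:
    best = best_match_per_row(sim)[0]
    print(f"{name}: best match is {captions[best]!r}")
```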

📄 License

This project is released under the MIT License.

📚 Citation

If you use this model, please cite the following paper:

```bibtex
@article{chen2025mmE5,
  title={mmE5: Improving Multimodal Multilingual Embeddings via High-quality Synthetic Data},
  author={Chen, Haonan and Wang, Liang and Yang, Nan and Zhu, Yutao and Zhao, Ziliang and Wei, Furu and Dou, Zhicheng},
  journal={arXiv preprint arXiv:2502.08468},
  year={2025}
}
```
